An Aussie woman who has South Asian heritage claims she couldn’t make bookings through Airbnb because the rental platform’s AI couldn’t match two images of her. If you’ve read about AI biases before, you’ll know this is actually a common (and persistent) issue.

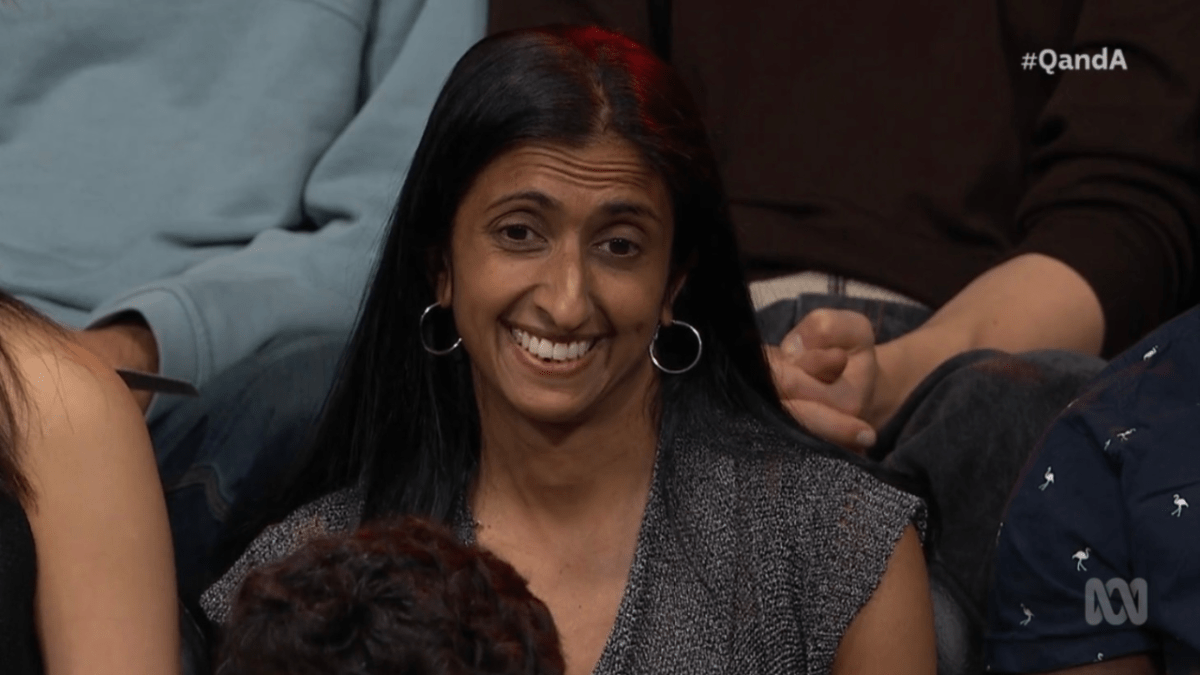

Francesca Dias, from Sydney, recalled her issues with the service on an episode of ABC’s Q+A. She told the panel that she couldn’t make an account with Airbnb because she failed its AI’s identity verification process.

“So recently, I found that I couldn’t activate an Airbnb account basically because the facial recognition software couldn’t match two photographs or photo ID of me and so I ended up having my white male partner make the booking for me,” she said.

One of the ways Airbnb confirms the identity of users is by matching a photo of you from your government ID (like a passport or driver’s licence) to a selfie it asks you to take. Alternatively, it’ll ask for two pictures of you from different government IDs and then try to match them.
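Airbnb hasn’t published how its matching actually works, so treat the following as a rough sketch of how this kind of check is commonly built, not as the company’s method. It assumes each photo has already been turned into a list of numbers (an “embedding”) by some face model that isn’t shown here. The function names and the `0.8` threshold are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Similarity between two embedding vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_same_person(id_embedding, selfie_embedding, threshold=0.8):
    """Hypothetical check: accept the match only if similarity clears the threshold.

    The threshold is an arbitrary illustrative value, not one used by any real service.
    """
    return cosine_similarity(id_embedding, selfie_embedding) >= threshold
```

The catch is in the part that isn’t shown: if the face model produces less reliable embeddings for some faces than others, two genuine photos of the same person can land under the threshold and get rejected.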

While Airbnb says on its website that the AI won’t always get it right, Dias noted that it *did* work for her partner. And yes, that’s probably because he’s white.

Racial biases in AI and algorithms have existed since the inception of this (kinda) new technology. You may have come across examples of racial biases in AI before: remember the viral “racist soap dispenser” that would dispense soap for white people but not Black people?

It’s not so much that the soap dispenser was actually racist, but that there is a pervasive issue of tech only really being trained on images of white people — which means once these technologies are released into the world, they are woefully inept at handling faces or skin colours that aren’t familiar to them (so basically, non-white people).

Another more recent — and larger scale — example of this is Uber’s identification algorithm, which functions in a similar way to the one Airbnb uses.

In 2021, dozens of Black and brown Uber and Uber Eats drivers took to the streets (and to court) after they were locked out of their accounts (and basically lost their jobs) when the company’s facial recognition software failed to recognise them.

According to the Independent Workers’ Union of Great Britain (IWGB) and Uber drivers, the Microsoft Face API software specifically had issues authenticating the faces of people with darker skin tones.

Uber vehemently denied any accusations of racism, and I’m genuinely sure no one at all expected this to be an issue — because really it’s not the company that’s created these inequalities, but the algorithm which was made by a third party.

But that’s kind of the problem, hey? The point remains that AI in general and across all industries (not just these companies) continues to be pretty bad at recognising dark skin tones, likely because the people creating these algorithms don’t train them to recognise a diverse set of people.
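One way to make that kind of gap visible is to count how often genuine users get wrongly rejected, broken down by group. Here’s a minimal sketch of that audit. The function name and the input format are assumptions for illustration, and any numbers you feed it would need to come from real, properly collected test results.

```python
from collections import defaultdict


def false_rejection_rate_by_group(attempts):
    """Share of genuine verification attempts that were rejected, per group.

    `attempts` is an iterable of (group, accepted) pairs, where every attempt
    is assumed to be a real person trying to verify their own ID.
    """
    totals = defaultdict(int)
    rejected = defaultdict(int)
    for group, accepted in attempts:
        totals[group] += 1
        if not accepted:
            rejected[group] += 1
    return {group: rejected[group] / totals[group] for group in totals}
```

If one group’s rate comes out consistently higher than another’s, that’s the bias showing up as a number a company (or a regulator) can track.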

The issue here, then, is who we actually hold responsible when users of services, whatever those services are, find themselves potentially being discriminated against by flawed algorithms. And to go back to Francesca Dias’ question on Q+A, how do we avoid AI bias and reinforcing discrimination in the first place?

“Often society does not have good representation of the full population in its datasets, because that’s how biased we’ve been historically, and those historical sets are used to train the machines that are going to make decisions on the future, like what happened to Francesca,” AI expert and founder of the Responsible Metaverse Alliance Catriona Wallace, who was speaking on Q+A’s panel, said.

“So it is staggering that this is still the case and it’s Airbnb. You would think a big, international, global company would get that shit sorted, but they still haven’t.”

CSIRO chief executive Doug Hilton suggested that until it was in a business’s financial interest to make sure these issues didn’t exist, they would persist, and said algorithmic discrimination should be treated not as a technical or capitalistic problem, but as a “regulatory” one.

“We have racial discrimination laws and we should be applying them forcefully, so that it is in Airbnb’s interest to get it right,” he said.

“We know actually technically how to fix this, we know how to actually make the algorithm [work].”

AI is increasingly becoming an inescapable part of our lives, so these issues need to be fixed now more than ever.