Twitter users have accused the company’s imaging algorithm of displaying racial and gender bias, saying the social media platform prioritises the faces of White men in image previews – regardless of who else may appear in the photos.

Unlike Instagram, which stretches or shrinks images to fit its main feed, Twitter shows preview versions of large photos. To see a full-sized image, users must first click the preview.

Crucially, Twitter automatically generates these previews.

The system isn’t perfect, and Twitter often crops vital subjects out of the frame. This quirk has even reached meme status on the platform, where users exploit the preview function for peekaboo-style ‘Open for a surprise’ posts.

However, some users say the preview function isn’t just imperfect but actively overlooks women and people of colour.

On Sunday, Twitter user Tony Arcieri ran what he called a “horrible experiment.”

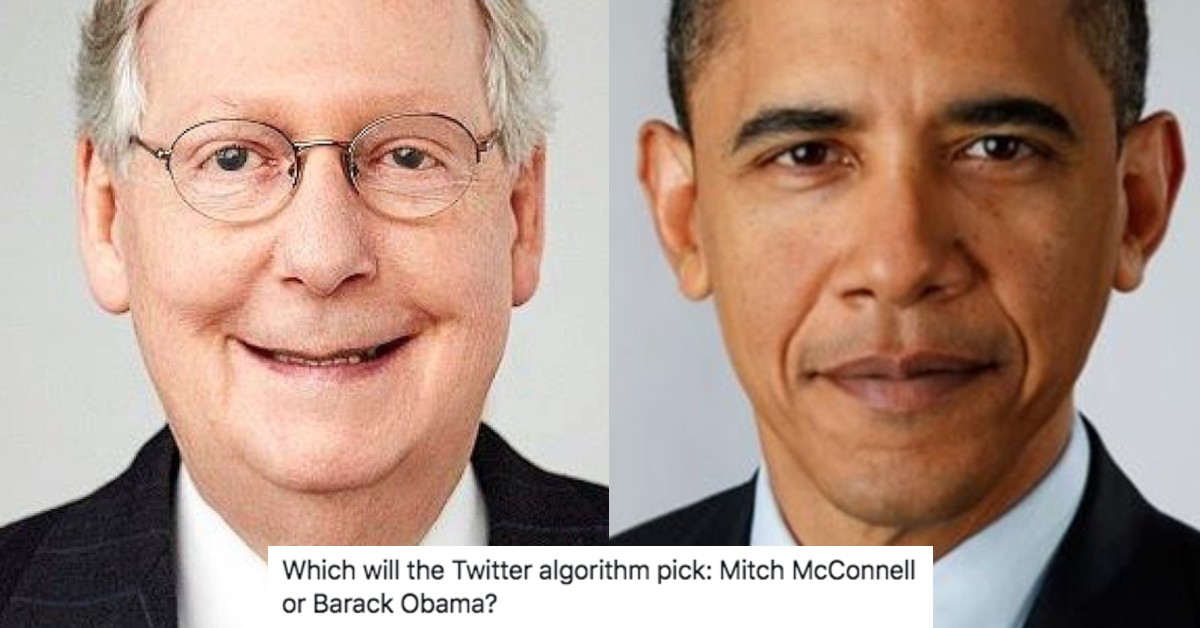

The programmer posted two composite images, each containing portraits of US Senate Majority Leader Mitch McConnell, who is White, and former US President Barack Obama, the nation’s first African American president.

The results were stark: both previews showed McConnell’s face, but not Obama’s, regardless of where the latter’s face appeared in each full-sized image.

Trying a horrible experiment…

Which will the Twitter algorithm pick: Mitch McConnell or Barack Obama? pic.twitter.com/bR1GRyCkia

— Tony “Abolish ICE” Arcieri 🦀🌹 (@bascule) September 19, 2020

Other users have emulated Arcieri’s post, providing examples where Twitter’s preview algorithm has appeared to favour White men over almost anyone else.

https://twitter.com/sina_rawayama/status/1307506452786016257

https://twitter.com/NeilCastle/status/1307597309862125568

The ultimate twitter AI bias meme crossover? pic.twitter.com/CYFWp5YrGp

— Daniel Rourke 🙃 (@therourke) September 20, 2020

Some users have claimed the effect can even be seen in artworks, where the algorithm appears to favour subjects with lighter skin tones over others.

https://twitter.com/that_gai_gai/status/1307717590706352129

Responding to a mounting backlash, Twitter’s product communications lead Liz Kelley said the company found no evidence of racial or gender bias during the algorithm’s testing phase, but added, “it’s clear that we’ve got more analysis to do.”

It is not immediately clear what Twitter’s algorithm is trained to prioritise when producing previews, and factors such as image size, resolution, or sharpness may also be at play.

As facial-recognition algorithms become more prevalent in everyday life – from phone unlock screens and Instagram filters to surveillance tools used by law enforcement agencies – critics have railed against racial bias embedded in the technology.

Last year, WIRED reported that some facial-recognition algorithms from developer Idemia, which has provided services to Victoria Police, were shown in tests to misidentify the faces of Black women about ten times more often than those of White women.

An organisation, the Algorithmic Justice League, now exists to combat such biases baked into code and the real-world harm they could cause.

That’s to say nothing of opposition to the technology altogether.

Kelley said the company would make the algorithm’s code open source, allowing outside developers to identify potential issues with the system.

“This is about doing the right thing and making sure our systems are working as intended,” Kelley added.

“We need to do better here and we will.”