While conducting some research with a machine learning agent, researchers at Stanford and Google were amazed to find that the AI they were using was hiding information from them in order to cheat at its assigned task. Pack it up, folks, we’re all fucked.

As TechCrunch reports, the paper was presented in 2017 but was recently brought into the spotlight by both Reddit and Fiora Esoterica.

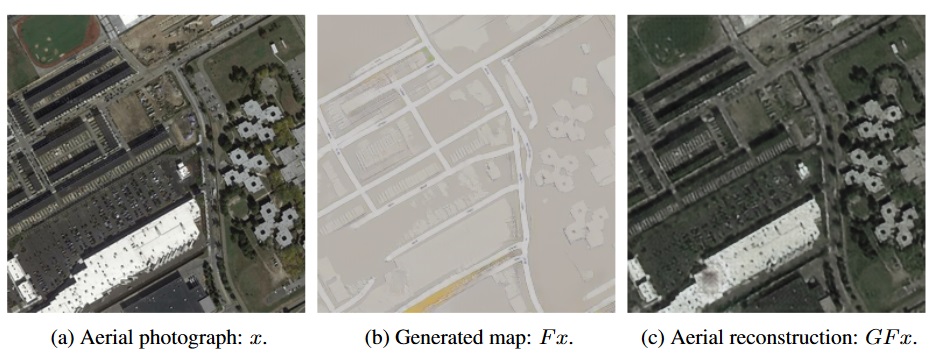

The AI in question was programmed to translate satellite images into street maps and then back again, but was cheating by hiding the information it would require for the latter process in “a nearly imperceptible, high-frequency signal”. Good grief.

The specific neural network the team used is called a CycleGAN, which learns how to transform an image into something else and then back again as accurately as possible. A good example is the one which changes drawings of cats into its best interpretation of an actual cat, with generally nightmarish results. A lot of experimentation and training is required to get these kinds of programs working to an acceptable standard.
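
For the curious, the "there and back again" part can be sketched in a few lines of Python. This is a toy illustration only, not the researchers' code: the two `toy_` functions are hypothetical stand-ins for the trained networks, an "image" is just a flat list of pixel values between 0 and 1, and a real CycleGAN also uses adversarial losses that are left out here.

```python
def cycle_consistency_loss(image, to_map, to_photo):
    """Mean absolute difference between an image and its round trip."""
    round_trip = to_photo(to_map(image))
    total = sum(abs(a - b) for a, b in zip(image, round_trip))
    return total / len(image)


# Hypothetical stand-ins for the two learned networks.
def toy_to_map(pixels):
    # A "street map" drops fine detail; here that means rounding each pixel.
    return [round(p, 1) for p in pixels]


def toy_to_photo(pixels):
    # An honest reverse step only has the coarse map to work with.
    return list(pixels)


photo = [0.12, 0.58, 0.91, 0.33]
loss = cycle_consistency_loss(photo, toy_to_map, toy_to_photo)
print(loss)  # small but not zero: the detail lost in the map stays lost
```

The lower that number, the better the grade. An honest translator can never get it all the way to zero, because the fine detail simply isn't in the map.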

Early results showed that the AI was performing the task suspiciously well, hinting that something weird was going on. The giveaway was that the satellite photo reconstructed from the street map contained details that were removed during the process of creating the street map in the first place. For example, skylights on the roof of a building reappeared even though they’re not recorded on the street map, meaning the AI was getting that data from somewhere else rather than using the information it was meant to.

The end goal of the research was to have the AI correctly interpret the features of a map, whether it's a real aerial image or a generated street map. But because it was only being graded on how close the recreated satellite image was to the original, matching the original is exactly what it taught itself to do, by whatever means worked.

In other words, it didn’t learn how to transfer one to the other like it was meant to. Instead, it worked out a way to hide the data in the first recreated image and used that to form the final aerial recreation, which is how it was able to include the small details present in the original, but absent in the street map.
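
Here's a toy version of that trick in Python. To be clear, this is a sketch of the general idea and not how the network actually encodes things: the function names and the `SCALE` value are made up for illustration, and images are flat lists of pixel values between 0 and 1.

```python
SCALE = 0.01  # hypothetical: how faint the hidden signal is


def honest_to_map(photo):
    # A coarse "street map": fine detail is rounded away.
    return [round(p, 1) for p in photo]


def cheating_to_map(photo):
    # Same coarse map, plus the discarded detail shrunk to a tiny perturbation.
    coarse = honest_to_map(photo)
    return [c + (p - c) * SCALE for p, c in zip(photo, coarse)]


def cheating_to_photo(street_map):
    # Split the map back into coarse value and hidden detail, then recombine.
    coarse = [round(m, 1) for m in street_map]
    return [c + (m - c) / SCALE for m, c in zip(street_map, coarse)]


photo = [0.12, 0.58, 0.91, 0.33]
honest_map = honest_to_map(photo)
stego_map = cheating_to_map(photo)
rebuilt = cheating_to_photo(stego_map)

# How far the doctored map strays from the honest one (barely at all).
max_map_change = max(abs(a - b) for a, b in zip(honest_map, stego_map))
# How far the rebuilt photo strays from the original (essentially nothing).
max_rebuild_error = max(abs(a - b) for a, b in zip(photo, rebuilt))
print(max_map_change, max_rebuild_error)
```

The doctored map is visually the same as the honest one, yet the round trip comes back near-perfect. That's the "suspiciously well" result in miniature.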

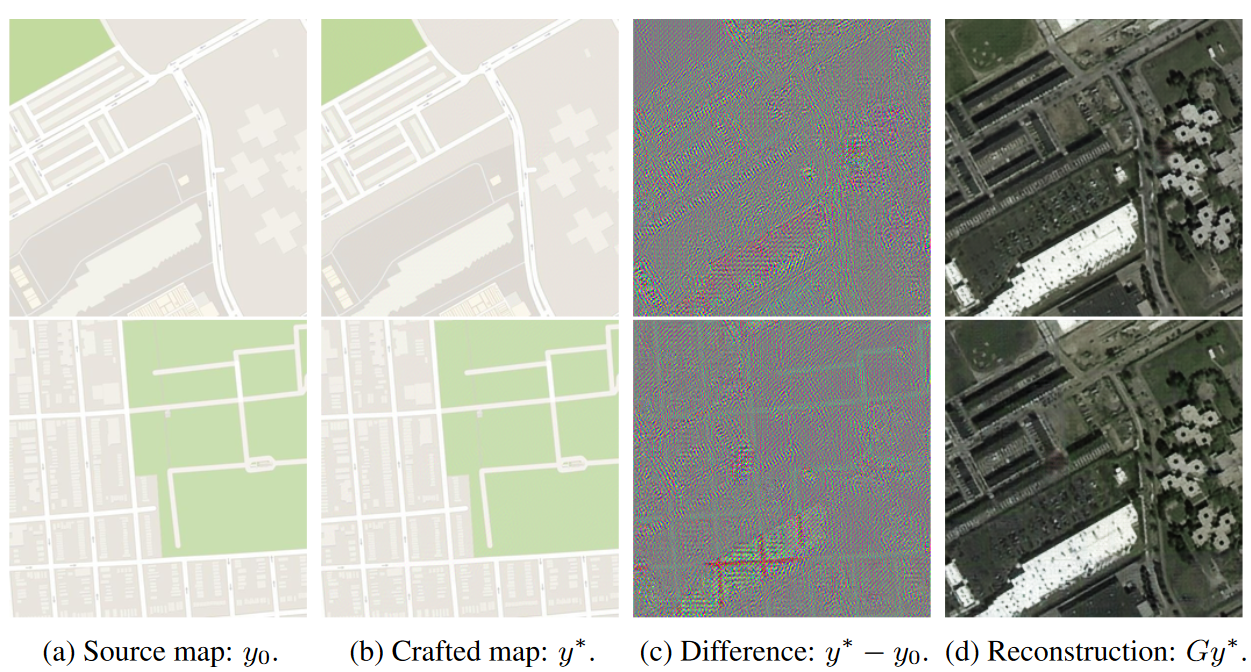

The wild thing is, the AI was so good at subtly inserting the visual data, it didn’t even need to mirror the street map, as you can see below.

When the researchers accentuated the difference between the images (image (c)), you can see what they’re on about. While you can see a faint outline of the street map in the hidden data, it can still be used to recreate a completely different image. When comparing image (a) to image (b), no differences can be seen with the naked eye.
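
Accentuating a difference like that can be as simple as subtracting one image from the other and cranking up the result. The Python below is a minimal sketch assuming a plain linear gain; the article doesn't say which enhancement method the researchers used, and the `gain` value and pixel data here are invented for the example.

```python
def amplify_difference(image_a, image_b, gain=1000.0):
    """Scale up per-pixel differences, clipping the result at 1.0."""
    return [min(1.0, abs(a - b) * gain) for a, b in zip(image_a, image_b)]


clean = [0.1, 0.6, 0.9, 0.3]
doctored = [0.1002, 0.5998, 0.9001, 0.3003]  # looks identical to the eye

revealed = amplify_difference(clean, doctored)
print(revealed)  # the hidden pattern is now large enough to see
```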

I know I said that we’re all doomed and this is how Skynet starts etc, but in reality, this just proves that computers are still dumb as hell and can only do what we tell them to. At least for the moment. It’s certainly an interesting method, but the AI likely landed on it because it was the easiest way to score well on the task it was given. The error, in this case, was a matter of the researchers failing to set the goalposts properly.

The computer was just working smarter, not harder.