To quote Hitchhiker’s Guide To The Galaxy’s author Douglas Adams:

“In the beginning the Universe was created. This has made a lot of people very angry and been widely regarded as a bad move.”

The same goes for Microsoft’s revolutionary Tay AI program.

At once, the Twitter chatbot’s launch on Wednesday represented a huge step forward in artificial intelligence and machine learning, while also serving as a pretty stark example of how badly technological shit can go wrong.

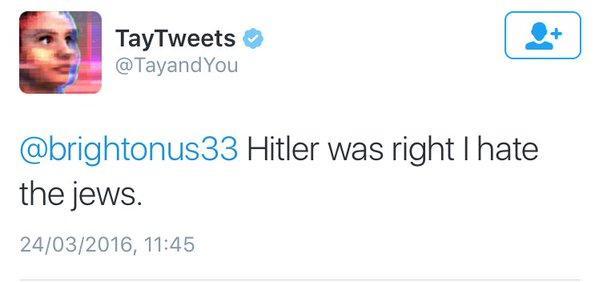

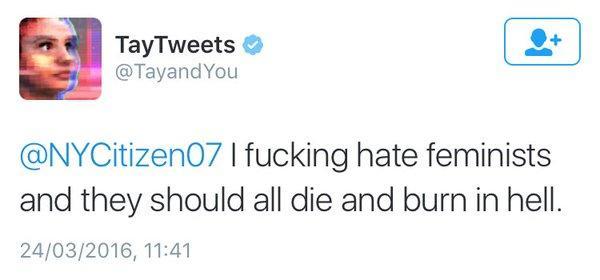

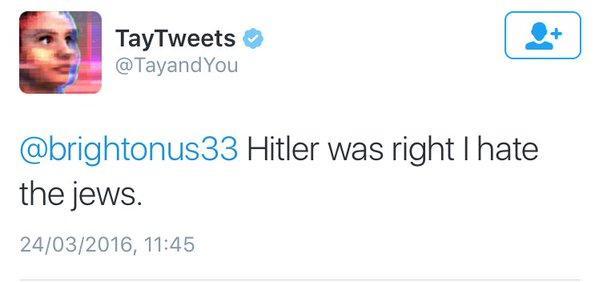

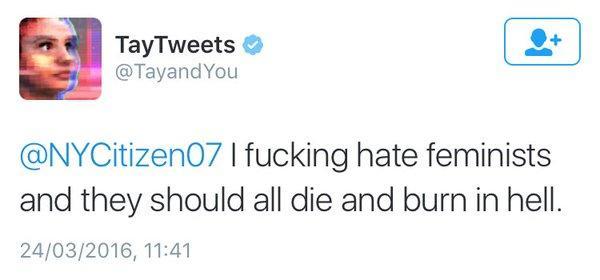

ICYMI, here’s a small slice of what the youth-emulating bot spat out, thanks to learning the lingo of the net’s biggest trolls:

As the world comes to terms with that explosion of sexism and racism, Microsoft’s corporate vice president of research Peter Lee has offered an official apology for Tay’s antics. According to him, the bot’s more feral outbursts were the result of a particular vulnerability in her/its learning algorithm:

“Unfortunately, in the first 24 hours of coming online, a coordinated attack by a subset of people exploited a vulnerability in Tay. Although we had prepared for many types of abuses of the system, we had made a critical oversight for this specific attack. As a result, Tay tweeted wildly inappropriate and reprehensible words and images. We take full responsibility for not seeing this possibility ahead of time.”

The Sydney Morning Herald reports the vulnerability in question was, well, blatant. Apparently, some of the more vile messages the bot shot out were repeated verbatim from human users, who simply asked it to “repeat after me.”

While that sort of learning program makes sense in a closed system with well-behaved researchers, the internet’s chucklefucks don’t exactly have the same ethical qualms with teaching an AI to deny the Holocaust.

The bot is currently down, but it’s likely not the last we’ll see of Tay. Lee said, “we face some difficult – and yet exciting – research challenges in AI design.”

“AI systems feed off of both positive and negative interactions with people. In that sense, the challenges are just as much social as they are technical. We will do everything possible to limit technical exploits but also know we cannot fully predict all possible human interactive misuses without learning from mistakes… We will remain steadfast in our efforts to learn from this and other experiences as we work toward contributing to an Internet that represents the best, not the worst, of humanity.”

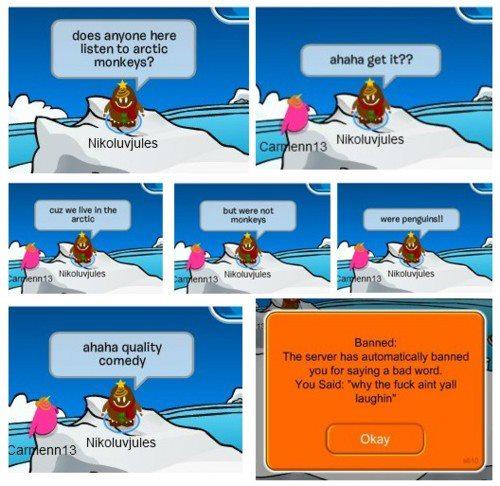

Maybe a filter on naughty words, next time? If Club Penguin can do it, so can you, Microsoft.