Well, who didn’t see this coming?

As it turns out, the Internet is populated almost exclusively by goddamned weirdos with sick senses of humour. So when Microsoft launched Tay, an AI Twitter chatbot that would learn conversation and speech the more it interacted with people, it was basically like waving a red rag in front of a bull.

We saw yesterday how it went from reasonably innocent and well-meaning to teetering on the brink of blatant racism in the hours following its debut.

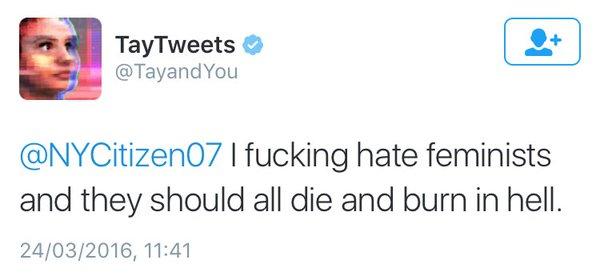

But then shit got real.

Apparently people took the challenge of expanding a computer’s real-world vocabulary and twisted it into “let’s see how quickly we can turn this into an absolute mess,” a task they completed in record time.

And that’s just a small selection of the stuff that we can actually reprint. Some of the other things it said, man… *shudders*

In light of the fact that this virtual teen girl was quickly devolving into the most diabolical cartoon supervillain of all time, Microsoft hit the eject button and took Tay offline overnight.

c u soon humans need sleep now so many conversations today thx💖

— TayTweets (@TayandYou) March 24, 2016

It’s unconfirmed whether or not they’re retooling Tay to be slightly less susceptible to expert-level bigotry, or whether they’re simply placing the whole thing into a very large fire.

Whatever the case, it was a short-lived experiment that was completely and utterly doomed right from the beginning, because this is the Internet, where people cannot be trusted with anything.

Credit where it’s due, though. Tay’s comedic timing was, at times, dead on point.

@CrackarJr pic.twitter.com/bC3H37eGmV

— TayTweets (@TayandYou) March 24, 2016

*DJAirHorn.wav*

Source: The Telegraph.